Classification of electroencephalogram (EEG) and electrocorticogram (ECoG) signals recorded during motor imagery (MI) has significant application potential, including communication assistance and rehabilitation support for patients with motor impairments.

However, these signals are inherently susceptible to physiological artifacts (e.g., eye blinking, swallowing), which pose persistent challenges for reliable classification.

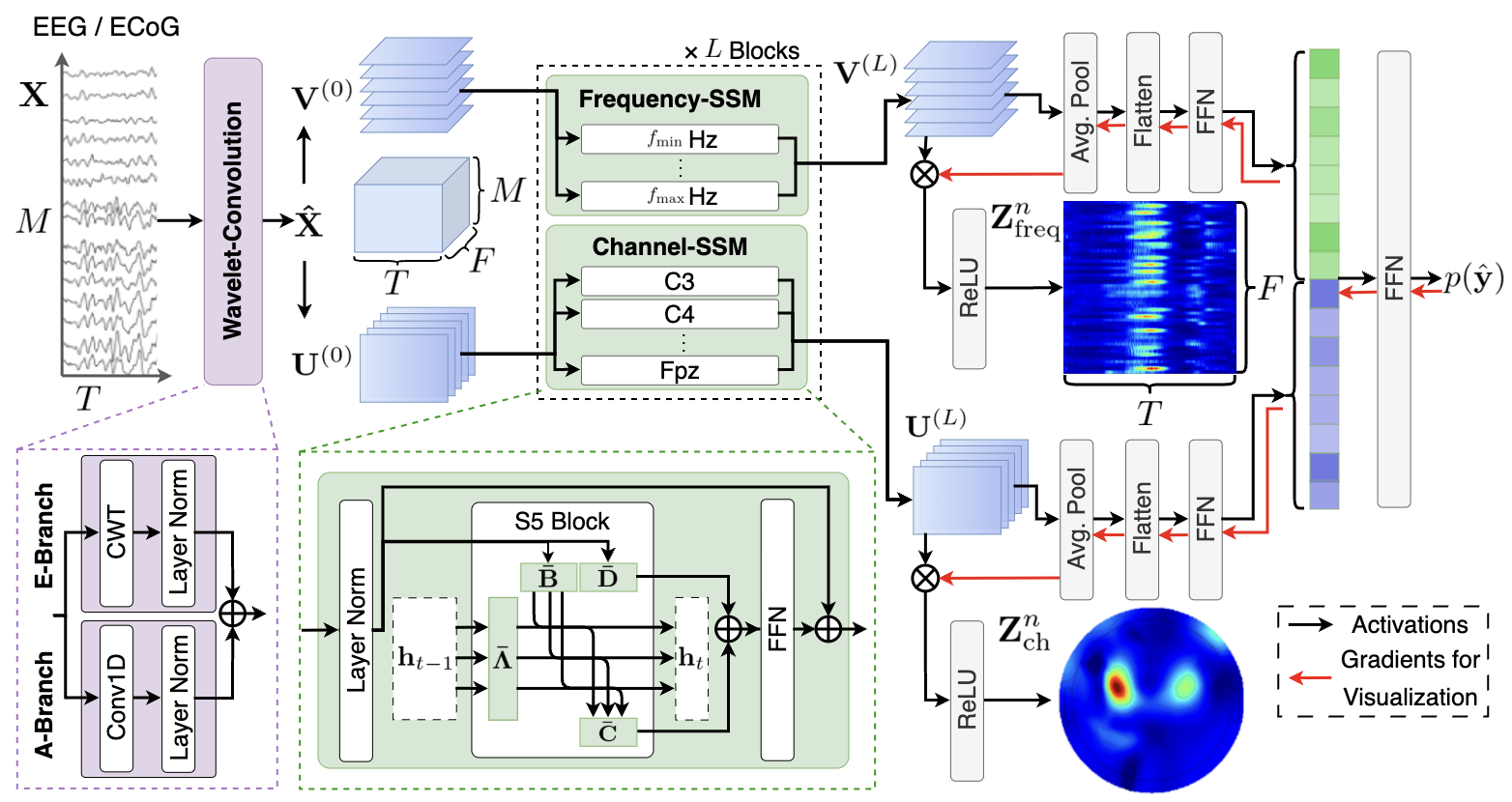

To overcome these limitations, we propose Cortical-SSM, a novel architecture that extends deep state-space models to capture integrated dependencies of EEG/ECoG signals across the temporal, spatial, and frequency domains.
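Deep state-space models are built around a discretized linear recurrence, x_k = Ā x_{k-1} + B̄ u_k and y_k = C x_k. The sketch below shows only that generic building block, applied along the temporal axis of each channel of a multichannel recording. It is not Cortical-SSM itself, and it does not reproduce how the architecture integrates spatial and frequency dependencies. The diagonal real-valued state matrix, the zero-order-hold discretization, the step size, and the 22-channel toy input are illustrative assumptions.

```python
import numpy as np


def discretize_zoh(a_diag, b, delta):
    """Zero-order-hold discretization of a diagonal continuous-time SSM.

    Assumption: A is diagonal with negative real entries (stable dynamics).
    a_diag: (N,) diagonal of A; b: (N,) input vector B; delta: step size.
    """
    a_bar = np.exp(delta * a_diag)        # A_bar = exp(delta * A)
    b_bar = (a_bar - 1.0) / a_diag * b    # B_bar = A^{-1} (A_bar - I) B for diagonal A
    return a_bar, b_bar


def ssm_scan(u, a_diag, b, c, delta):
    """Run x_k = A_bar x_{k-1} + B_bar u_k, y_k = C x_k over a 1-D sequence u."""
    a_bar, b_bar = discretize_zoh(a_diag, b, delta)
    x = np.zeros_like(a_diag)             # hidden state starts at zero
    y = np.empty(len(u), dtype=float)
    for k, u_k in enumerate(u):
        x = a_bar * x + b_bar * u_k       # elementwise because A_bar is diagonal
        y[k] = c @ x                      # project state to a scalar output
    return y


def ssm_over_channels(signals, a_diag, b, c, delta):
    """Hypothetical helper: apply one shared SSM to each channel of a (channels, time) array."""
    return np.stack([ssm_scan(ch, a_diag, b, c, delta) for ch in signals])


# Toy usage on random data (hypothetical shapes: 22 channels, 1000 samples).
rng = np.random.default_rng(0)
eeg = rng.standard_normal((22, 1000))
n_state = 16
a_diag = -np.linspace(0.5, 8.0, n_state)  # assumed stable diagonal A
b = np.ones(n_state)
c = rng.standard_normal(n_state) / n_state
out = ssm_over_channels(eeg, a_diag, b, c, delta=0.01)
print(out.shape)  # (22, 1000)
```

Because the recurrence is linear and time-invariant, its output equals a convolution of the input with the impulse response C Ā^k B̄, which is what lets deep SSMs process long EEG/ECoG sequences efficiently in practice.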

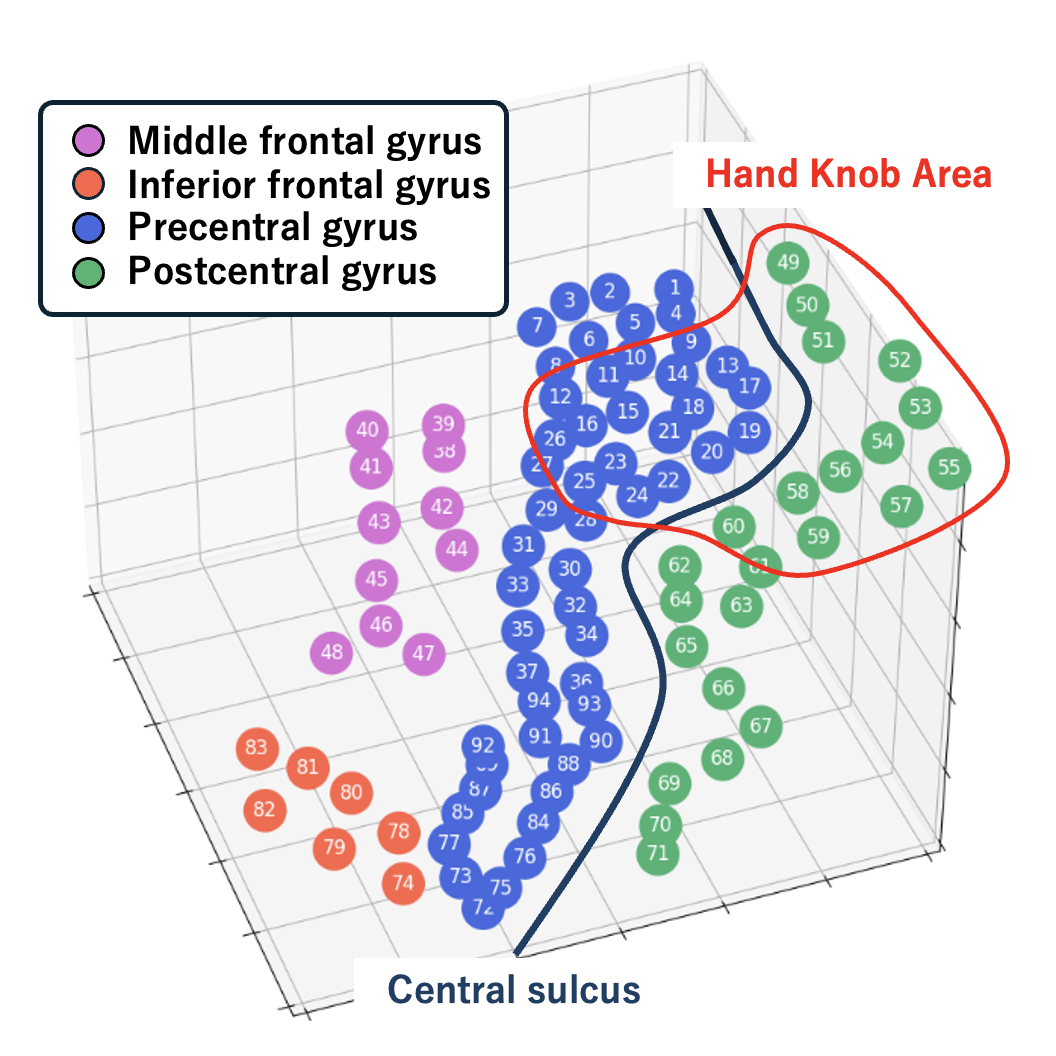

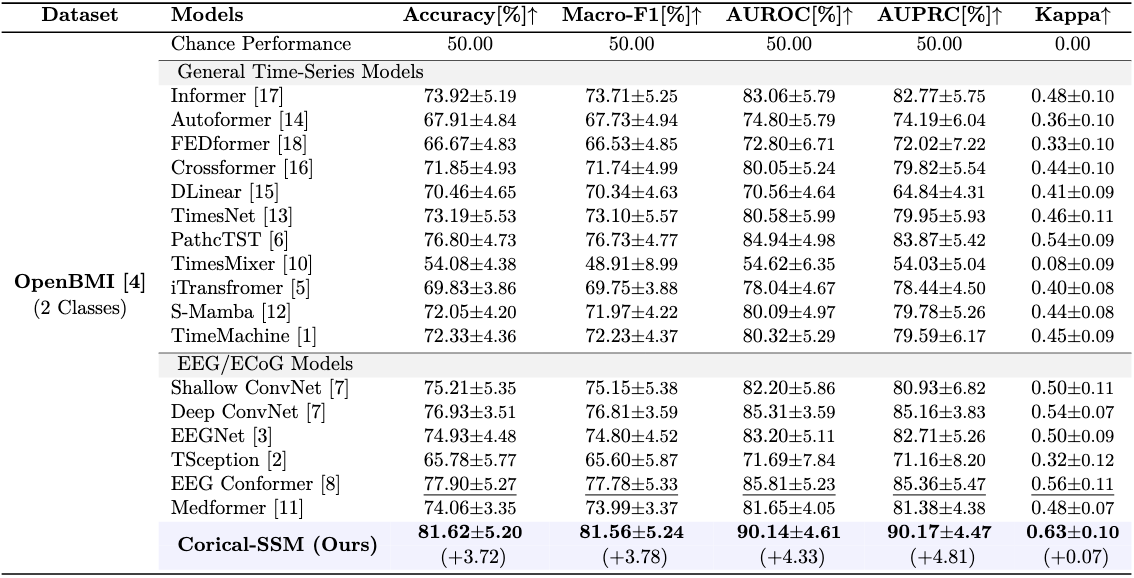

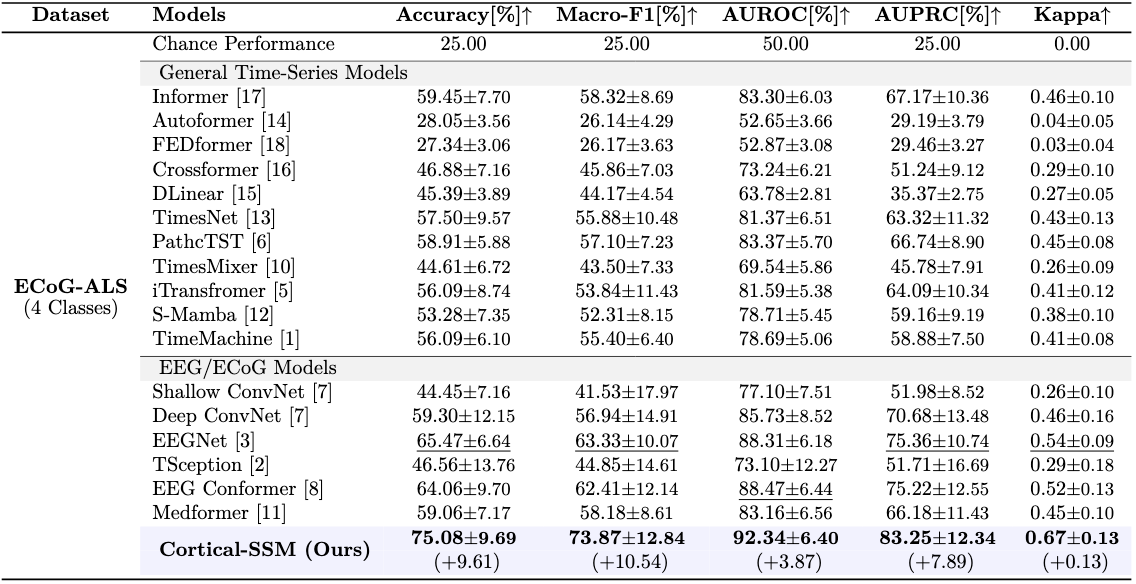

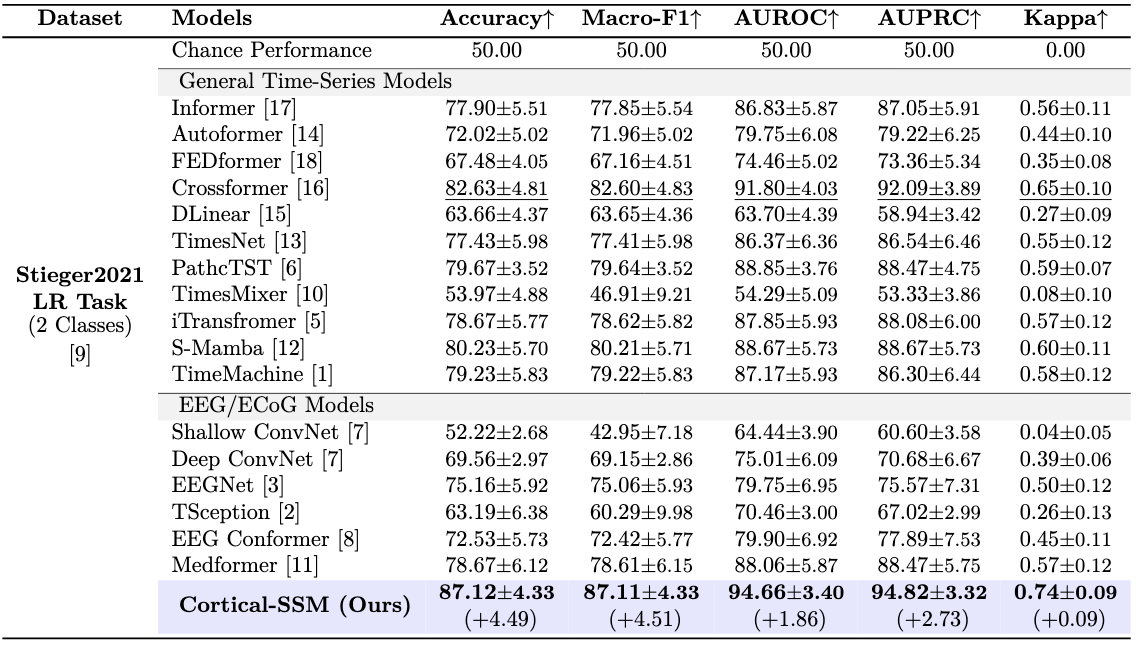

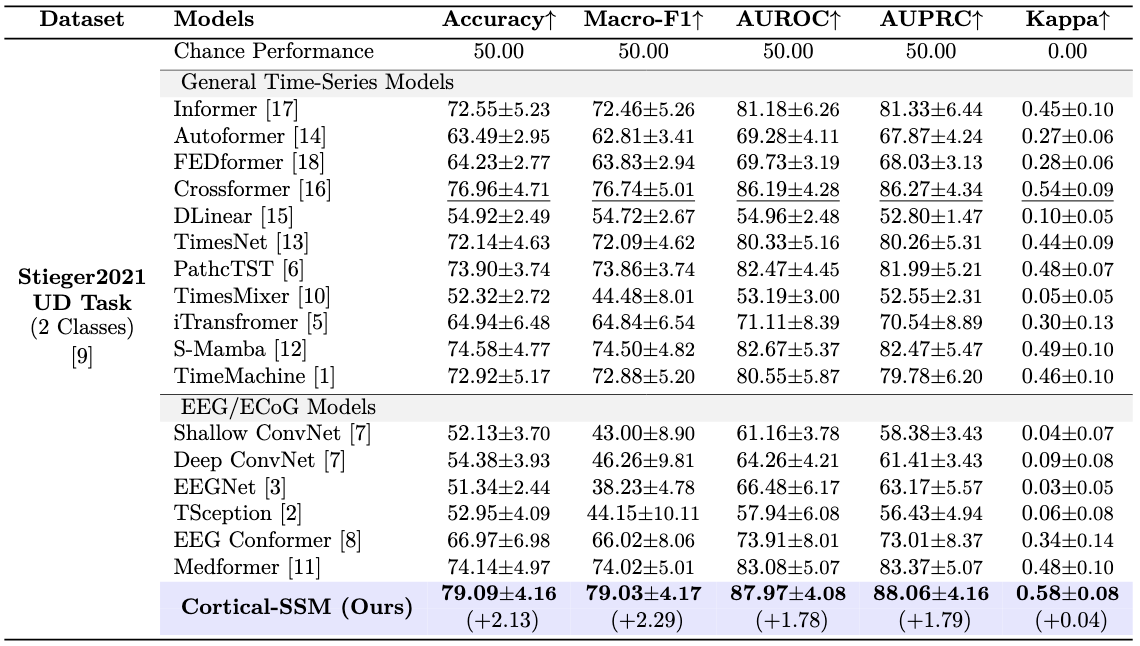

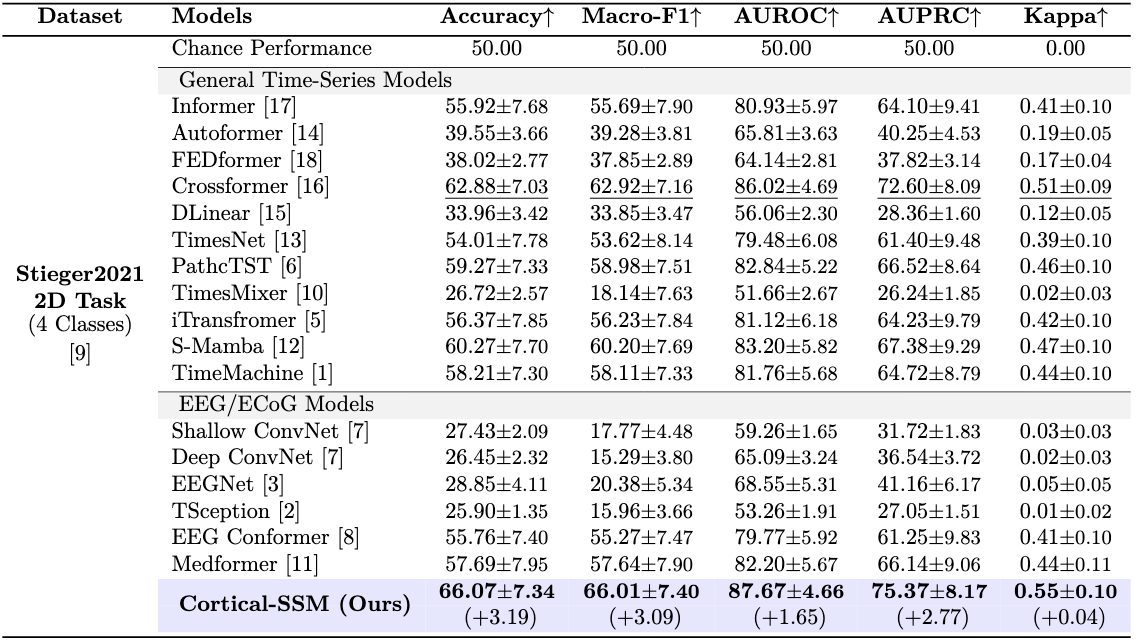

We conduct a comprehensive evaluation on three benchmarks: 1) two large-scale public MI EEG datasets with more than 50 subjects, and 2) a clinical MI ECoG dataset recorded from a patient with amyotrophic lateral sclerosis (ALS).

Across these three benchmarks, our method outperformed baseline methods on standard evaluation metrics.

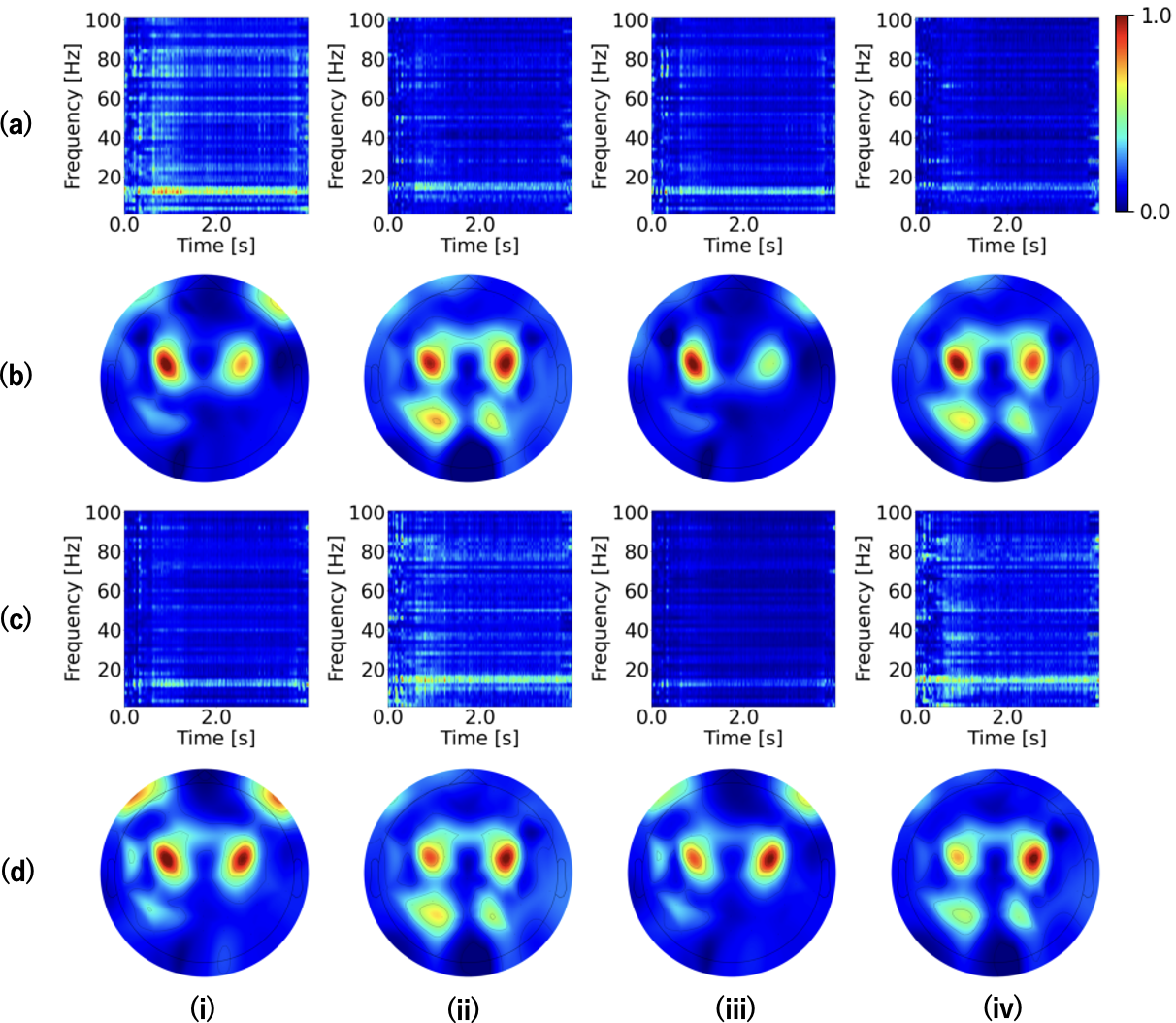

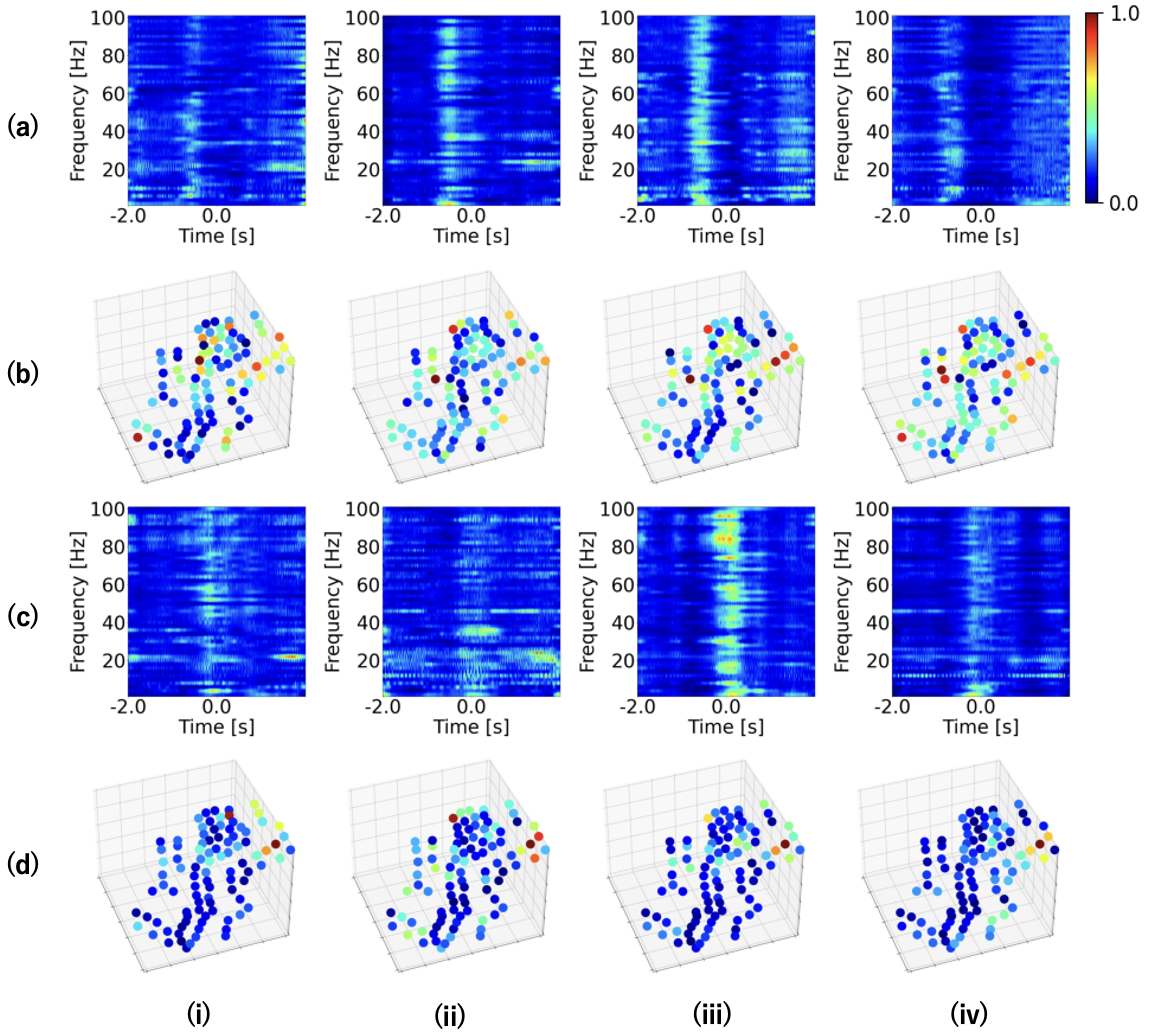

Furthermore, visual explanations derived from our model indicate that it effectively captures neurophysiologically significant regions in both EEG and ECoG signals.